Mega Project

Luna - Assistant • Hive • Chain

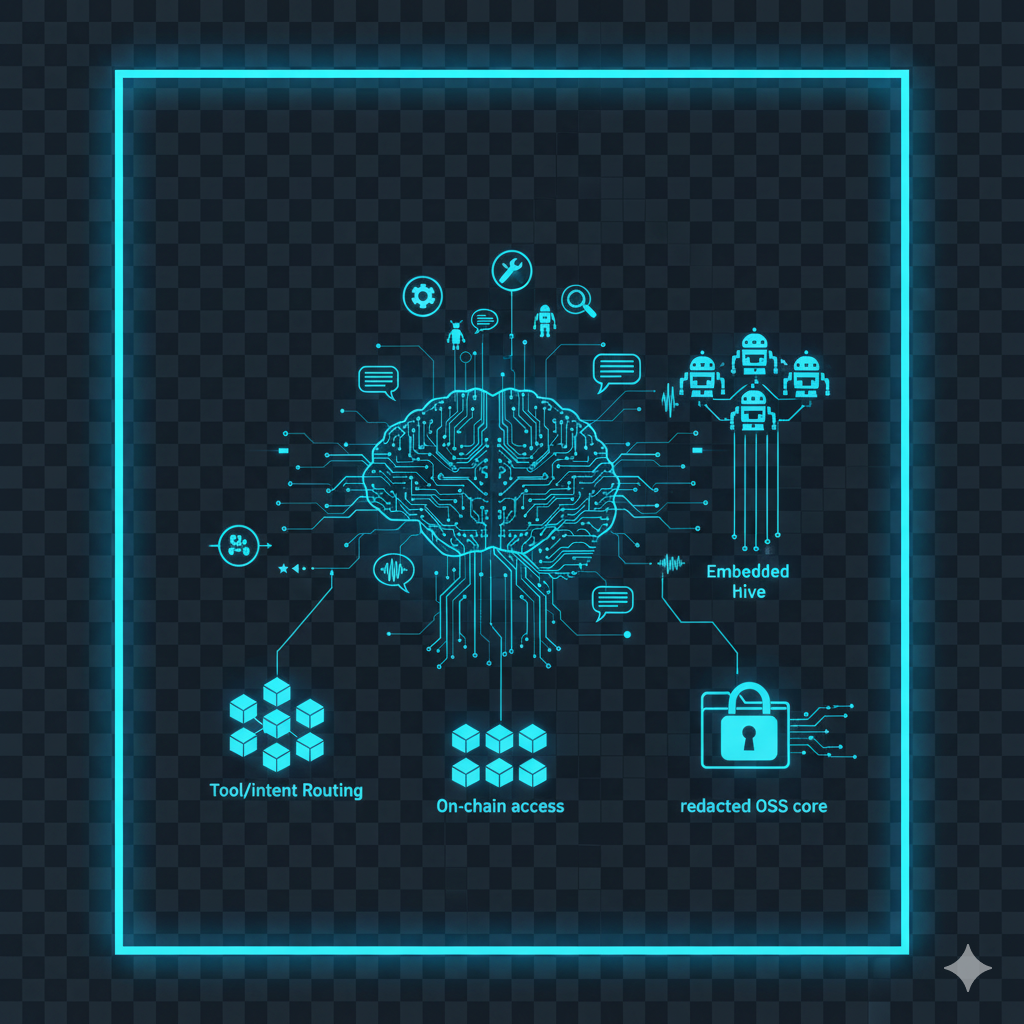

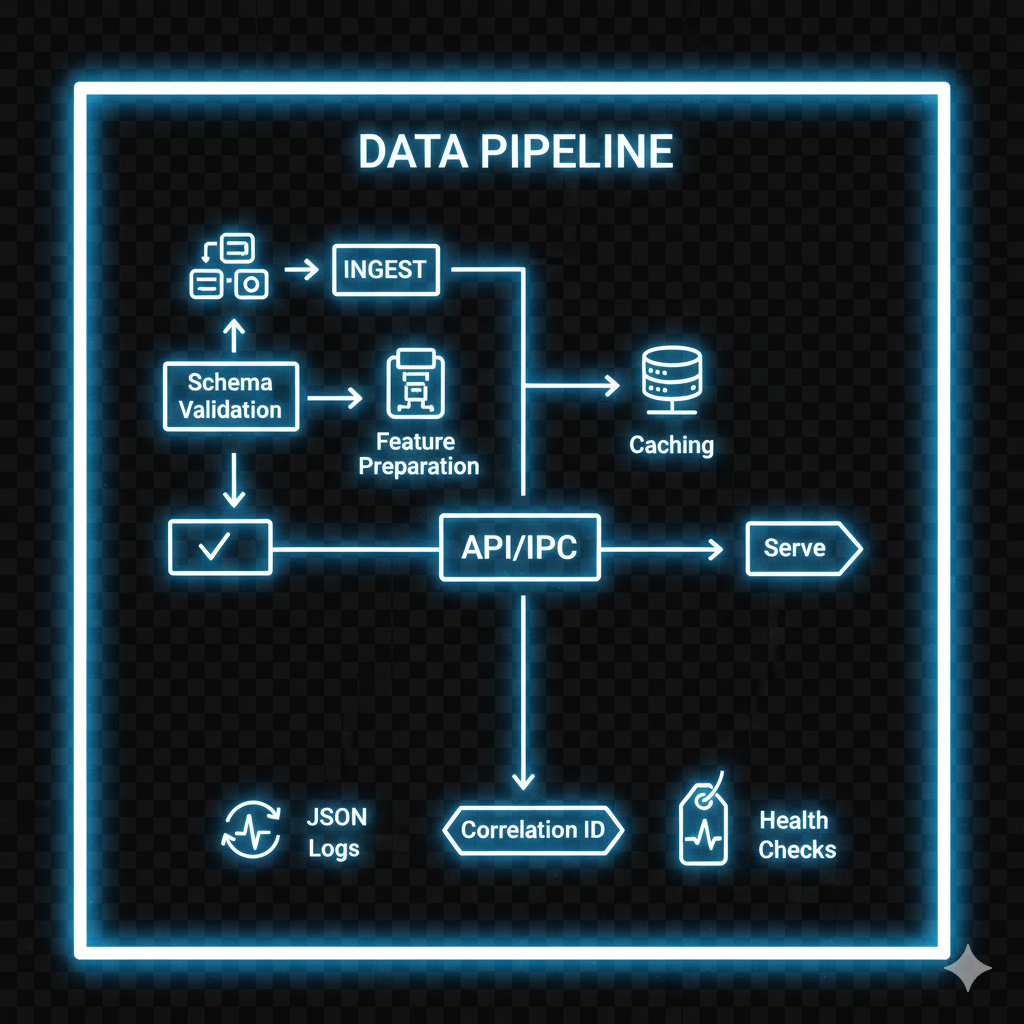

A next-generation assistant for home and edge deployments that orchestrates devices, powers a robotics hive, and offers a build through opt-in, on-chain access, all on top of a redacted open-source core.

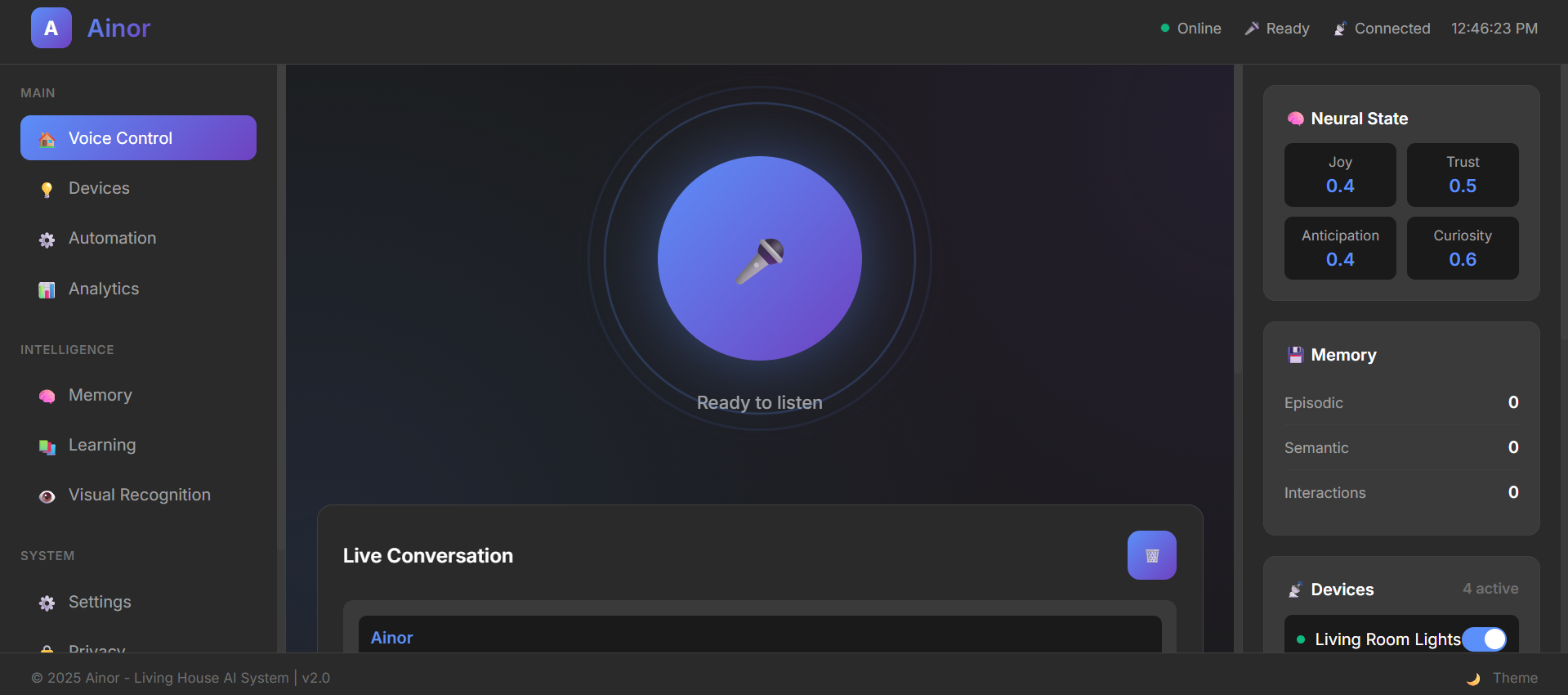

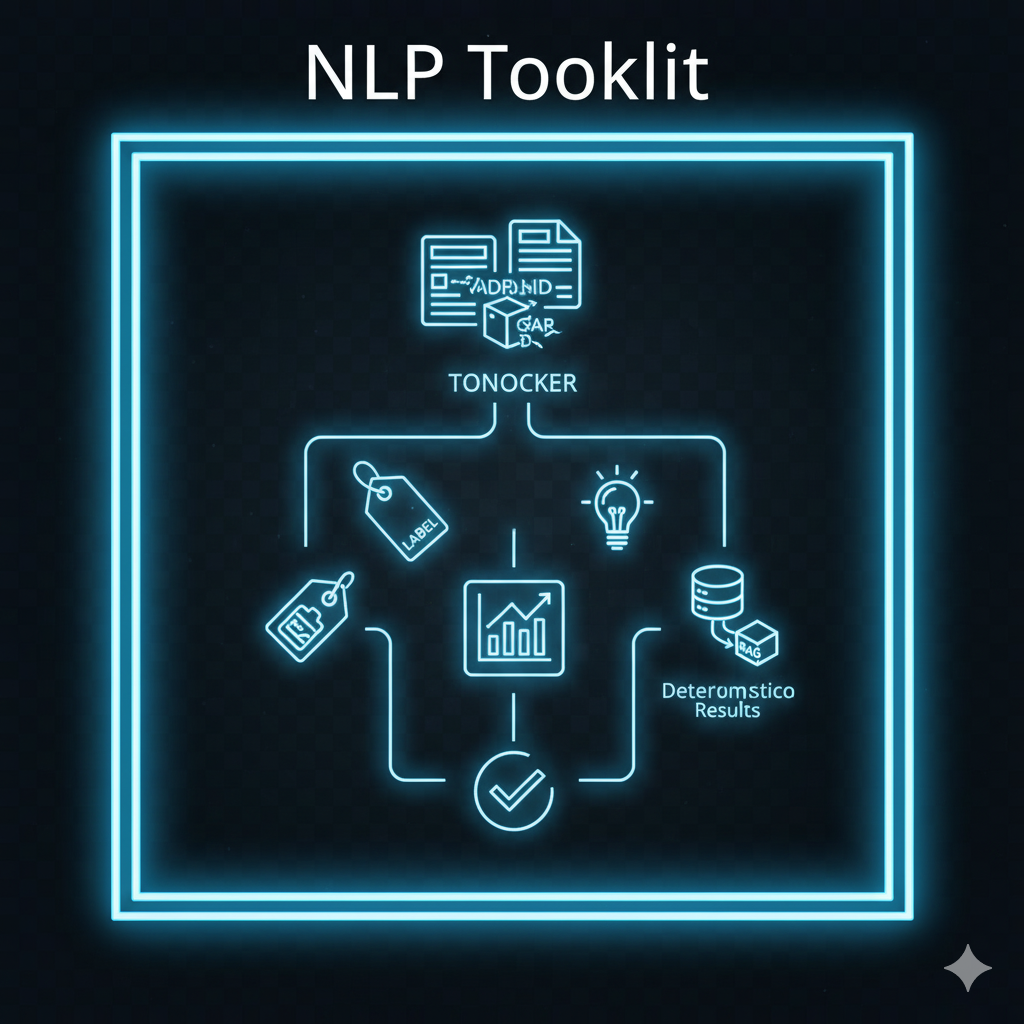

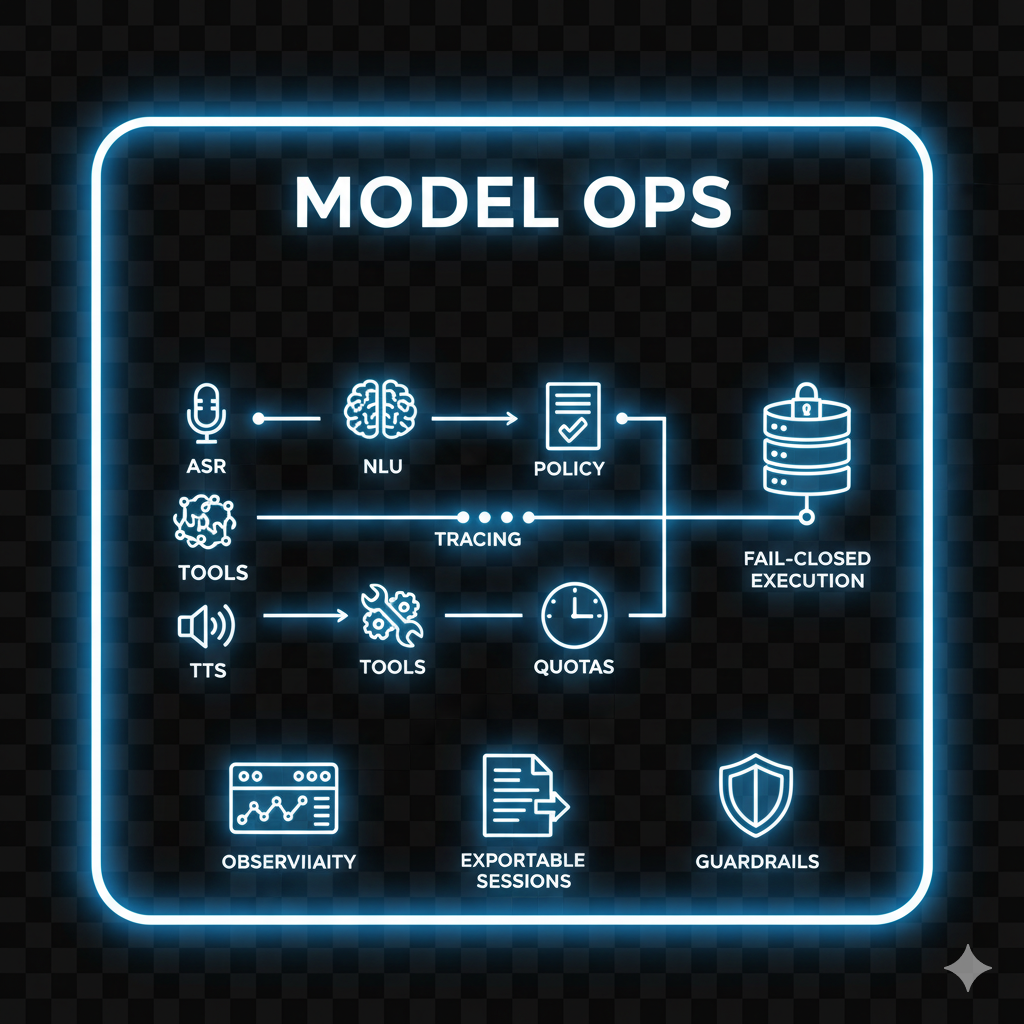

- Assistant Core: streaming ASR ↔ TTS, barge-in, intent handling and tool calls, privacy modes, guardrails.

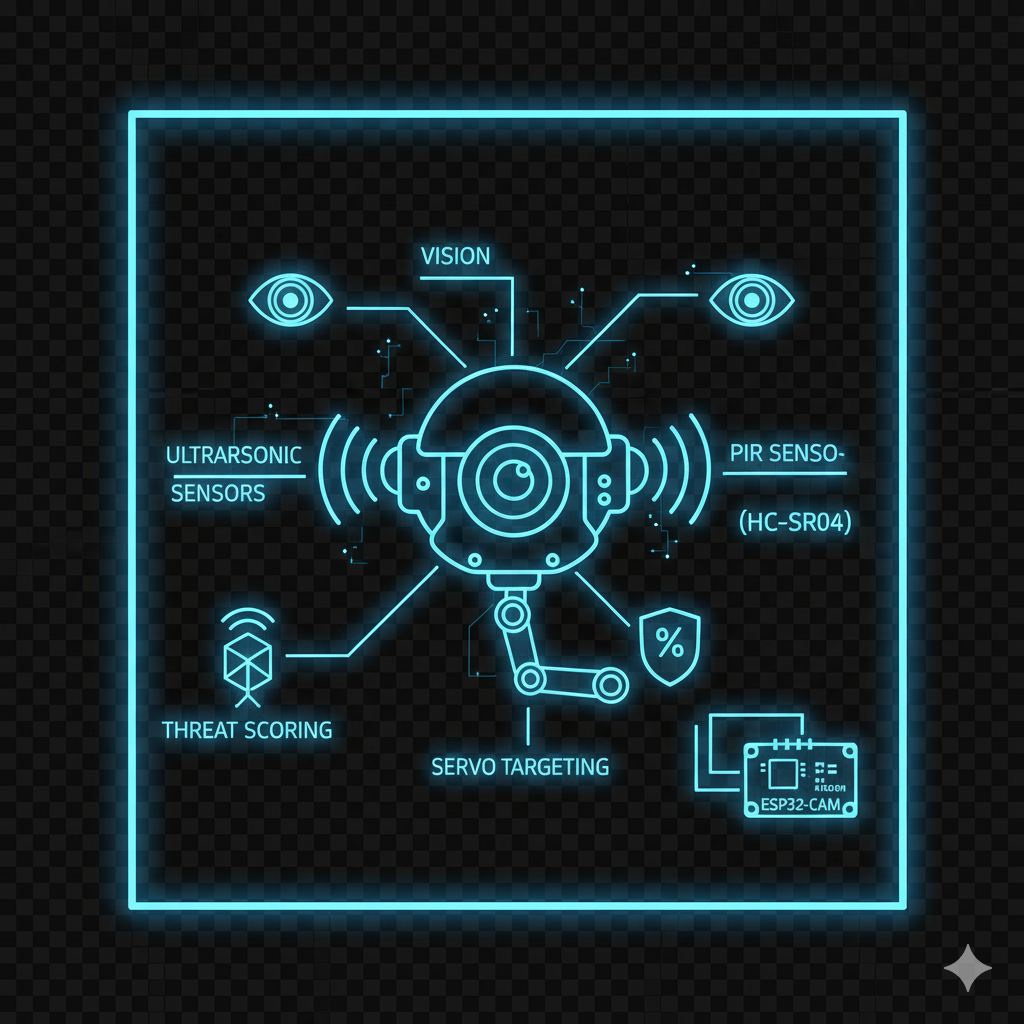

- Embedded Hive: controls AceBot, X-EYE, VIGI; shared state bus, policies, watchdogs.

- Chain Access: token- or NFT-gated utilities inside a supervised environment.

- OSS Core: redacted, production-minded edition for local builds and extensions.
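The Assistant Core lists barge-in, which lets the user interrupt the assistant mid-reply. One common approach is to stream TTS in chunks, poll a voice-activity signal between chunks, and hand the turn back to ASR as soon as speech is detected. The sketch below assumes that design. `BargeInController`, `speak`, `Turn`, and the `user_is_speaking` callback are hypothetical names, not part of Luna's API.

```python
from enum import Enum, auto
from typing import Callable, Iterable, List


class Turn(Enum):
    IDLE = auto()       # nothing playing, not listening
    SPEAKING = auto()   # TTS playback in progress
    LISTENING = auto()  # user has the floor; ASR is active


class BargeInController:
    """Hypothetical turn-taking controller (illustrative, not Luna's API)."""

    def __init__(self) -> None:
        self.turn = Turn.IDLE
        self.played: List[str] = []

    def speak(self, chunks: Iterable[str], user_is_speaking: Callable[[], bool]) -> bool:
        """Play TTS chunks in order, stopping early on barge-in.

        Returns True if the whole reply was played, False if the user
        interrupted. `user_is_speaking` stands in for a VAD signal.
        """
        self.turn = Turn.SPEAKING
        for chunk in chunks:
            if user_is_speaking():
                # Barge-in: drop the remaining audio and yield to ASR.
                self.turn = Turn.LISTENING
                return False
            self.played.append(chunk)  # a real system would send audio here
        self.turn = Turn.IDLE
        return True


if __name__ == "__main__":
    vad = iter([False, False, True])  # simulated VAD: user speaks on 3rd check
    ctrl = BargeInController()
    finished = ctrl.speak(["Sure,", "the lights", "are now", "off."], lambda: next(vad))
    print(finished, ctrl.played, ctrl.turn)  # False ['Sure,', 'the lights'] Turn.LISTENING
```

Checking between chunks bounds interruption latency by the chunk length. Shorter chunks react faster at the cost of more scheduling overhead.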
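For the Embedded Hive, a shared state bus with watchdogs could look roughly like the following. Each device publishes its latest state, every publish doubles as a heartbeat, and a watchdog reports devices that have gone quiet. This is a minimal in-process sketch. `StateBus`, `publish`, `stale_devices`, the state fields, and the timeout values are assumptions for illustration. A real hive would more likely run over a message transport than a Python dict.

```python
import time
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Optional

Callback = Callable[[str, dict], None]


class StateBus:
    """Hypothetical shared state bus with a heartbeat watchdog."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock                       # injectable for testing
        self._state: Dict[str, dict] = {}          # latest state per device
        self._last_seen: Dict[str, float] = {}     # last publish time per device
        self._subs: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, device: str, callback: Callback) -> None:
        """Register a callback fired on every state update from `device`."""
        self._subs[device].append(callback)

    def publish(self, device: str, state: dict) -> None:
        """Store the device's state; the publish also counts as a heartbeat."""
        self._state[device] = state
        self._last_seen[device] = self._clock()
        for callback in self._subs[device]:
            callback(device, state)

    def get(self, device: str) -> Optional[dict]:
        return self._state.get(device)

    def stale_devices(self, timeout_s: float) -> List[str]:
        """Watchdog: devices whose last heartbeat is older than timeout_s."""
        now = self._clock()
        return sorted(d for d, seen in self._last_seen.items() if now - seen > timeout_s)


if __name__ == "__main__":
    fake_now = [0.0]  # controllable clock for the demo
    bus = StateBus(clock=lambda: fake_now[0])
    bus.subscribe("VIGI", lambda dev, st: print(f"{dev} -> {st}"))
    bus.publish("AceBot", {"battery": 0.8})
    fake_now[0] = 1.0
    bus.publish("VIGI", {"mode": "patrol"})  # prints: VIGI -> {'mode': 'patrol'}
    fake_now[0] = 2.5
    print(bus.stale_devices(timeout_s=2.0))  # ['AceBot']
```

The clock is injected so the watchdog can be tested deterministically. Policies (for example, pausing a robot that goes stale) could then be layered on top of `stale_devices`.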
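Chain Access is described as token- or NFT-gated. The minimal sketch below assumes two things: ownership is checked with a balance lookup, and access is granted as a short-lived session inside the supervised environment. `ChainGate`, `Session`, `min_balance`, and the 15-minute TTL are hypothetical. In practice `balance_of` might wrap an ERC-20 or ERC-721 `balanceOf` call made through an RPC client. Here it is injected as a plain function. The sketch also omits proof of wallet ownership (for example, verifying a signed challenge), which a real gate would need.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Session:
    wallet: str
    token: str         # opaque bearer token for the supervised environment
    expires_at: float  # clock time after which the session is invalid


class ChainGate:
    """Hypothetical token/NFT gate; not the project's actual access layer."""

    def __init__(
        self,
        balance_of: Callable[[str], int],
        min_balance: int = 1,
        ttl_s: float = 900.0,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._balance_of = balance_of  # e.g. wraps an on-chain balanceOf(wallet)
        self._min_balance = min_balance
        self._ttl_s = ttl_s
        self._clock = clock

    def open_session(self, wallet: str) -> Optional[Session]:
        """Grant a short-lived session if the wallet holds enough tokens."""
        if self._balance_of(wallet) < self._min_balance:
            return None
        return Session(wallet, secrets.token_urlsafe(16), self._clock() + self._ttl_s)

    def is_valid(self, session: Session) -> bool:
        """Sessions expire; the holder must re-check ownership afterwards."""
        return self._clock() < session.expires_at


if __name__ == "__main__":
    holdings = {"0xabc": 1, "0xdef": 0}  # illustrative wallets and balances
    gate = ChainGate(balance_of=lambda w: holdings.get(w, 0))
    print(gate.open_session("0xdef"))  # None
    session = gate.open_session("0xabc")
    print(session is not None and gate.is_valid(session))  # True
```

Short TTLs limit the damage if a token is transferred after a session is issued, because the next session requires a fresh ownership check.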